Right now, we're in that cuddly phase where newsletter writers are dabbling with ChatGPT's DALL-E image maker to create free illustrations like the one above warning of our future robot overlords.

This, of course, is not good news for illustrators who used to get paid for that kind of work.

Meanwhile, creative people of all types—from Hollywood writers and actors to journalists to novelists to photographers to designers to musicians to songwriters to voice-over artists—are waking up to the realization that AI can already approximate enough of what they do to make them redundant. Literally.

AI is eating the internet

As even The New Yorker acknowledges, 2023 was the year A.I. ate the internet.

Celebrities are already sliding into the DMs of AI-generated Instagram influencers.

There's already a "TIME 100" list just for AI.

We're heading into an election year in which AI-generated lies will take a Midjourney around the world before the truth puts on its Balenciaga sneakers.

In March 2023, Pablo Xavier, the 31-year-old construction worker whose viral, AI-generated images of the Pope in a Balenciaga puffy coat fooled millions, told BuzzFeed News he was "tripping on shrooms" when he came up with the idea to create the images using the Midjourney app.

Of course, not everyone will be just tripping on shrooms and looking for laughs when they post content online.

The bad guys will be flooding the zone, too.

In fact, many experts predict that within months more than 90% of new internet content will be AI-generated.

Oh, the things you can do

Even if you don't want to write a novel in one day using ChatGPT, there are now dozens of AI-powered apps you can use to create the kind of content that previously required highly creative and/or highly skilled humans—and took a lot of time.

With AI you can do these things quickly and, quite often, at no cost.

ElevenLabs lets you turn text into speech for free using "natural AI voices" in 29 different languages. You can even clone your own voice in minutes.

Suno will let you write a song about anything or anyone with a simple prompt and give you a free audio or video file featuring AI singers and music in a minute or two. With a couple of additional apps, you're just minutes away from turning it into an MTV-quality animated music video.

At HeyGen, you can get started for free to "effortlessly produce studio quality videos with AI-generated avatars and voices." You can even use HeyGen to generate a video-plus-voice avatar of yourself that will let you always look your best and remain ageless on your YouTube channel for years to come.

Whether you want to animate photos or make your photos talk, turn doodles into artworks, or create a special-effects-filled movie trailer in minutes, there's an AI for that.

For creative professionals, being aware of what's possible is now essential. As the founders of Eleven Films told me recently, "AI... is a powerful tool to assist in the creation of SOME content," even for a "very nuts and bolts, old-school GenX company" like theirs.

But beyond the utility, they also saw danger, saying: "We are teetering on the brink of fascism and the propaganda that is attainable now with AI should scare EVERY voter."

The big question: Should we be worried? Or should we just relax and trust that the small cadre of tech bros whose first attempts at AI created systems that were riddled with bias and tainted by racism finally have everything under control?

The answer: Be worried. As OpenAI CEO Sam Altman told TIME in December 2023: "No one knows what happens next."

Obviously, we need to be scared of the bad guys

In the drama that surrounded the firing and reinstatement of OpenAI CEO Sam Altman in November 2023, there was much debate over the role played by Ilya Sutskever, OpenAI's Chief Scientist.

In favoring the ouster, Sutskever sided with board members whose safety concerns may have been compounded by the notion that the suddenly famous Altman's rush to accelerate the commercialization of AI was at odds with OpenAI's dual mission: "to build AI that’s smarter than humanity, while also making sure that AI would be safe and beneficial to humanity."

Sutskever has long been aware of the potential—and potential dangers—of artificial intelligence, especially when it reaches what OpenAI and others call "Artificial General Intelligence" (AGI). (IBM calls it Strong AI: "a theoretical form of AI that replicates human functions, such as reasoning, planning, and problem-solving.")

In a recent YouTube video, The Guardian highlighted some of what Sutskever said in a series of interviews conducted between 2016 and 2019 (i.e., years before ChatGPT took the world by storm) for the 2019 documentary iHuman.

At the start of the Guardian video, Sutskever says the usual positive stuff:

AI is a great thing, because AI will solve all the problems that we have today. It will solve employment. It will solve disease. It will solve poverty.

It's the kind of rosy view of AI's potential—especially in areas such as healthcare—still being voiced by tech luminaries such as Bill Gates in his predictions for 2024.

But even back in the Trump era, Ilya Sutskever was warning that bad guys would use AI for nefarious purposes:

The problem of fake news is going to be a million times worse. Cyber attacks will become much more extreme. We will have totally automated AI weapons. I think AI has the potential to create infinitely stable dictatorships.

When it came to AGI, Sutskever was clear on the safety concerns. Once an AI gets smarter than the people who made it, once it starts to think for itself and build things by itself, it may start looking down on its creators:

You're going to see dramatically more intelligent systems and I think it's highly likely that those systems will have completely astronomical impact on society.

Will humans actually benefit? And who will benefit, who will not?

The beliefs and desires of the first AGIs will be extremely important and so it's important to program them correctly. I think that if this is not done, then the nature of evolution, of natural selection, favors those systems that prioritize their own survival above all else.

It's not that it's going to actively hate humans and want to harm them, but it is going to be too powerful and I think a good analogy would be the way humans treat animals. It's not we hate animals. I think humans love animals and have a lot of affection for them. But when the time comes to build a highway between two cities, we are not asking the animals for permission. We just do it because it's important for us. And I think by default that's the kind of relationship that's going to be between us and AGIs which are truly autonomous and operating on their own behalf.

The big question: Once AGI is possible—and some believe OpenAI has already achieved it internally—how worried should we be that bad guys start using it?

The answer: Very worried. As OpenAI CEO Sam Altman told TIME in December 2023: "We put these tools out into the world and people... use them to architect the future."

Worrying about the bad guys isn't enough. We also need to be scared of the good guys

If you're old enough to remember Mark Zuckerberg being named TIME's Person of the Year in 2010, you might recall him being praised for his Pollyannish vision for social media. Zuckerberg circa 2010 was still imagining a world filled with old friends who not only reconnect with you on Facebook, but also start tracking your movements to make sure they can say hi (or take care to avoid you) every time they find themselves in the vicinity of your restaurant table or supermarket aisle.

What actually happened was that, in his relentless pursuit of profits over people, Zuckerberg allowed Facebook to become a global misinformation machine and a continued threat to children's health and democracy—committing massive, journalism-destroying fraud on media companies, even as it allowed regimes and bad actors around the world to fuel violence and instability.

In a non-Taylor Swift year, Sam Altman might have been named TIME's Person of the Year for 2023.

Instead he received a participation trophy: TIME named him its "CEO of the Year."

TIME Managing Editor Sam Jacobs interviewed Altman on stage at the "A Year in TIME" event on 12 December 2023.

Altman says some of the usual positive stuff:

By the time the end of this decade rolls around, I think the world is going to be in an unbelievably better place... think of how different the world can be... (looking beyond ChatGPT)... every person has the world's best chief of staff, and then after that, every person has a company of 20 or 50 (AI) experts that can work super well together. And then after that everybody has a company of ten thousand experts in every different field that can work super well together.

If you're excited at the prospect of a Chief of Staff funneling the best, most customized advice of 10,000 experts to you each day, you may be less excited by the fact that you don't have a job.

Because, as TIME's CEO of the Year made clear earlier in 2023, AI doesn't just mean creativity apps, factory robots and self-driving cars: It's now coming for all the white-collar jobs.

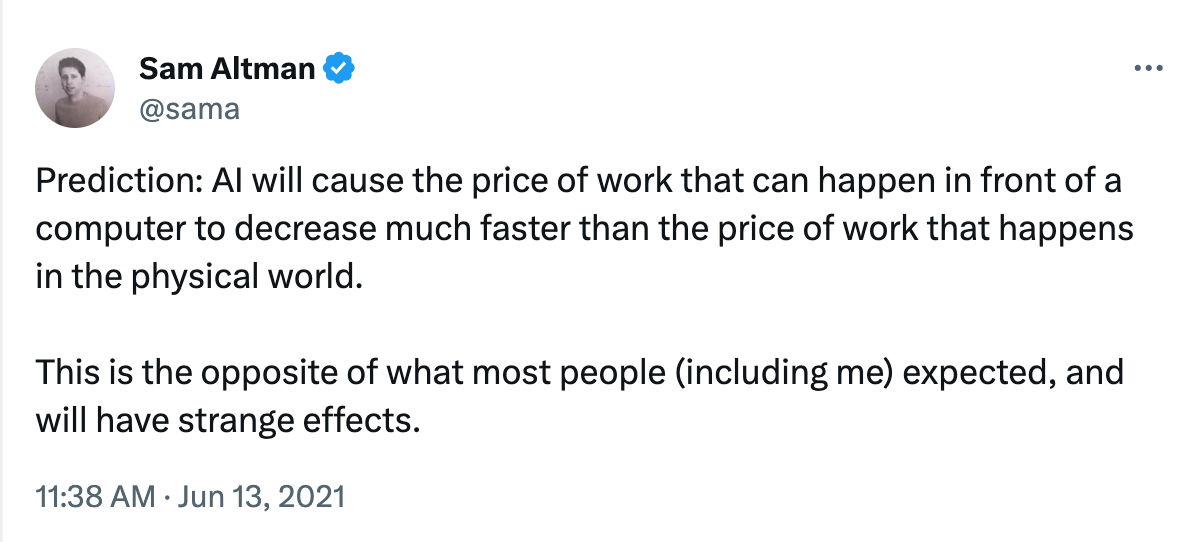

This is not news to his Twitter followers. He tweeted as much back in 2021:

But I'll save the issue of post-labor economics for another day.

For now, let's get back to the dangers of AGI.

To TIME, Altman acknowledges AGI's power and its downsides. But listen to him carefully and he seems to be admitting it's either already here or will be before the next election:

AGI will be the most powerful technology that humanity has ever invented... but there are going to be real downsides. One of those, and there will be many... is going to be around the persuasive ability of these models and the ability of them to affect elections next year.

Altman even imagines how—in 2024—AGI's "persuasive ability" could be specifically applied on the individual level:

The thing that I’m more concerned about is what happens if an AI reads everything you’ve ever written online, every article, every tweet, every everything and then right at the exact moment sends you one message customized for you that really changes the way you think about the world. That's like a new kind of interference that wasn't possible before AI.

The big question: If the kind of AGI that can "affect elections next year" and ultimately create "infinitely stable dictatorships" already exists, how reassured can we be that the cavalry will rush in to save us?

The answer: The cavalry isn't coming.

"An existential threat to humanity"

In May 2023, an international group of doctors and public health experts said the threat of AI in the 2020s was as grave as the threat posed by nuclear weapons in the 1980s.

As Tina Reed wrote in Axios, the group's concerns included:

- Potential surveillance applications and information campaigns that would "further undermine democracy by causing a general breakdown in trust or by driving social division and conflict, with ensuing public health impacts."

- The development of future weapons systems which could be capable of locating, selecting and killing "at an industrial scale" without the need for human supervision.

- AI's potential impact on jobs—and the adverse health impacts of unemployment.

On 18 December 2023, six days after Sam Altman spoke at the "A Year in TIME" event, OpenAI announced a new, 27-page "Preparedness Framework" to "track, evaluate, forecast, and protect against catastrophic risks posed by increasingly powerful models."

As Axios's Ina Fried explained, the new framework:

Proposes using a matrix approach, documenting the level of risk posed by frontier models across several categories, including risks such as the likelihood of bad actors using the model to create malware, social engineering attacks, or to distribute harmful nuclear or biological weapons info.

The new Preparedness team—currently staffed by only four people—is focused on the next phase of AI ("frontier") development and sits between a safety team that, writes Fried, "focuses on mitigating and addressing the risks posed by the current crop of tools" and "a superalignment team (that) looks at issues posed by future systems whose capabilities outstrip those of humans."

I've seen enough January 6th videos to know that four people linking arms to protect the world from everything the "bad actors" can throw at us probably won't be enough.

So enjoy your AI apps while you can.

Because while AI was fun in 2023 the way Facebook was fun in 2010, things will only accelerate from here.

There are "catastrophic risks" ahead.

And you can believe me and Sam Altman when we tell you: "no one knows what happens next."

Thanks to my paid subscribers, this newsletter comes to you ad-free and with no paywall. If you haven't already, please sign up now to get future issues delivered free by email... or, if you can, support with a paid annual subscription for just $2/month.

Subscribe to Unprecedented
